Machine learning advances human-computer interaction

Inside the University of Rochester’s Robotics and Artificial Intelligence Laboratory, a robotic torso looms over a row of plastic gears and blocks, awaiting instructions. Next to it, Jacob Arkin ’13, a doctoral candidate in electrical and computer engineering, gives the robot a command: “Pick up the middle gear in the row of five gears on the right,” he says to the Baxter Research Robot. The robot, sporting a University of Rochester winter cap, pauses before turning and extending its right limb in the direction of the object.

Baxter, along with other robots in the lab, is learning how to perform human tasks and to interact with people as part of a human-robot team. “The central theme through all of these is that we use language and machine learning as a basis for robot decision making,” says Thomas Howard ’04, an assistant professor of electrical and computer engineering and director of the University’s robotics lab.

Machine learning, a subfield of artificial intelligence, started to take off in the 1950s, after the British mathematician Alan Turing published a revolutionary paper about the possibility of devising machines that think and learn. His famous Turing Test holds that if a person is unable to distinguish a machine from a human being, the machine has real intelligence.

Today, machine learning provides computers with the ability to learn from labeled examples and observations of data, and to adapt when exposed to new data, instead of having to be explicitly programmed for each task. Researchers are developing computer programs that build models to detect patterns, draw connections, and make predictions from data, so that a system can make informed decisions about what to do next.
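To make "learning from labeled examples" concrete, here is a minimal sketch of one of the simplest learning methods, a nearest-centroid classifier. It is an illustration only, not a method used in the Rochester lab: the function names, the two measurements (width and height in centimeters), and the sample values are all hypothetical. Nothing in the code states what a gear looks like; the rule comes entirely from the labeled examples.

```python
from statistics import mean


def fit_centroids(examples):
    """Average the feature vectors of each label.

    examples: list of (features, label) pairs, where features is a tuple of numbers.
    Returns a dict mapping each label to its centroid (mean feature vector).
    """
    rows_by_label = {}
    for features, label in examples:
        rows_by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in rows_by_label.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))

    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Hypothetical labeled examples: (width_cm, height_cm) -> object type.
training = [
    ((4.0, 1.0), "gear"),
    ((4.4, 1.2), "gear"),
    ((3.0, 3.0), "block"),
    ((3.2, 2.8), "block"),
]

centroids = fit_centroids(training)
new_object = (4.1, 0.9)  # a measurement the program has not seen before
label = predict(centroids, new_object)
```

Adapting to new data in this sketch means adding more labeled pairs to `training` and calling `fit_centroids` again; the prediction code itself does not change.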

A natural language model developed in the Robotics and Artificial Intelligence Laboratory allows a user to speak a simple command, which the robot can translate into an action. If the robot is given a command to pick up a particular object, it can distinguish that object from others nearby, even if they are identical in appearance.
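The command quoted in this article, "pick up the middle gear in the row of five gears on the right," shows why appearance alone is not enough: the gears look the same, so the target has to be found from its position relative to the others. The sketch below illustrates that idea with a hand-written rule. It is not the lab's model, which is learned from data rather than coded by hand, and every name here (`SceneObject`, `middle_of_row`, the coordinate convention, the sample scene) is a hypothetical chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class SceneObject:
    name: str
    kind: str   # e.g. "gear" or "block"
    x: float    # meters; positive means the robot's right (assumed convention)
    y: float    # meters; distance away from the robot along the row


def middle_of_row(objects: List[SceneObject], kind: str, side: str) -> Optional[SceneObject]:
    """Pick the middle object of the given kind on the given side of the table.

    Returns None when there is no single middle object (zero or an even count).
    """
    if side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    candidates = [
        o for o in objects
        if o.kind == kind and (o.x > 0 if side == "right" else o.x < 0)
    ]
    if len(candidates) % 2 == 0:
        return None
    row = sorted(candidates, key=lambda o: o.y)  # order the row from near to far
    return row[len(row) // 2]


# Hypothetical scene: five identical gears on the right, three on the left, one block.
scene = (
    [SceneObject(f"gear_r{i + 1}", "gear", 0.4, 0.3 + 0.1 * i) for i in range(5)]
    + [SceneObject(f"gear_l{i + 1}", "gear", -0.4, 0.3 + 0.1 * i) for i in range(3)]
    + [SceneObject("block_r1", "block", 0.6, 0.5)]
)

target = middle_of_row(scene, "gear", "right")
```

A fixed rule like this handles only one phrasing in one kind of layout, which is the limitation that learned language models are meant to overcome.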

The results of machine learning are apparent everywhere: in Facebook’s personalization of each member’s News Feed, speech recognition systems like Siri, e-mail spam filtration, financial market tools, recommendation engines such as those used by Amazon and Netflix, and language translation services.

Thomas Howard is pictured with a Baxter robot in his lab in Gavett Hall (University photo / J. Adam Fenster)

Howard and other University professors are developing new ways to use machine learning to provide insights into the human mind and to improve the interaction between computers, robots, and people.

Howard, Arkin, and collaborators at MIT developed mathematical models that allow Baxter to understand complex natural language instructions. When Arkin directs Baxter to “pick up the middle gear in the row of five gears on the right,” their models enable the robot to quickly learn the connections between audio, environmental, and video data, and adjust algorithm characteristics to complete the task.

What makes this particularly challenging is that robots need to be able to process instructions in a wide variety of environments and to do so at a speed that makes for natural human-robot dialog. The group’s research on this problem led to a Best Paper Award at the Robotics: Science and Systems 2016 conference.

By improving the accuracy, speed, scalability, and adaptability of such models, Howard envisions a future in which humans and robots perform tasks in manufacturing, agriculture, transportation, exploration, and medicine cooperatively, combining the accuracy and repeatability of robotics with the creativity and cognitive skills of people.

“It is quite difficult to program robots to perform tasks reliably in unstructured and dynamic environments,” Howard says. “It is essential for robots to accumulate experience and learn better ways to perform tasks in the same way that we do, and algorithms for machine learning are critical for this.”

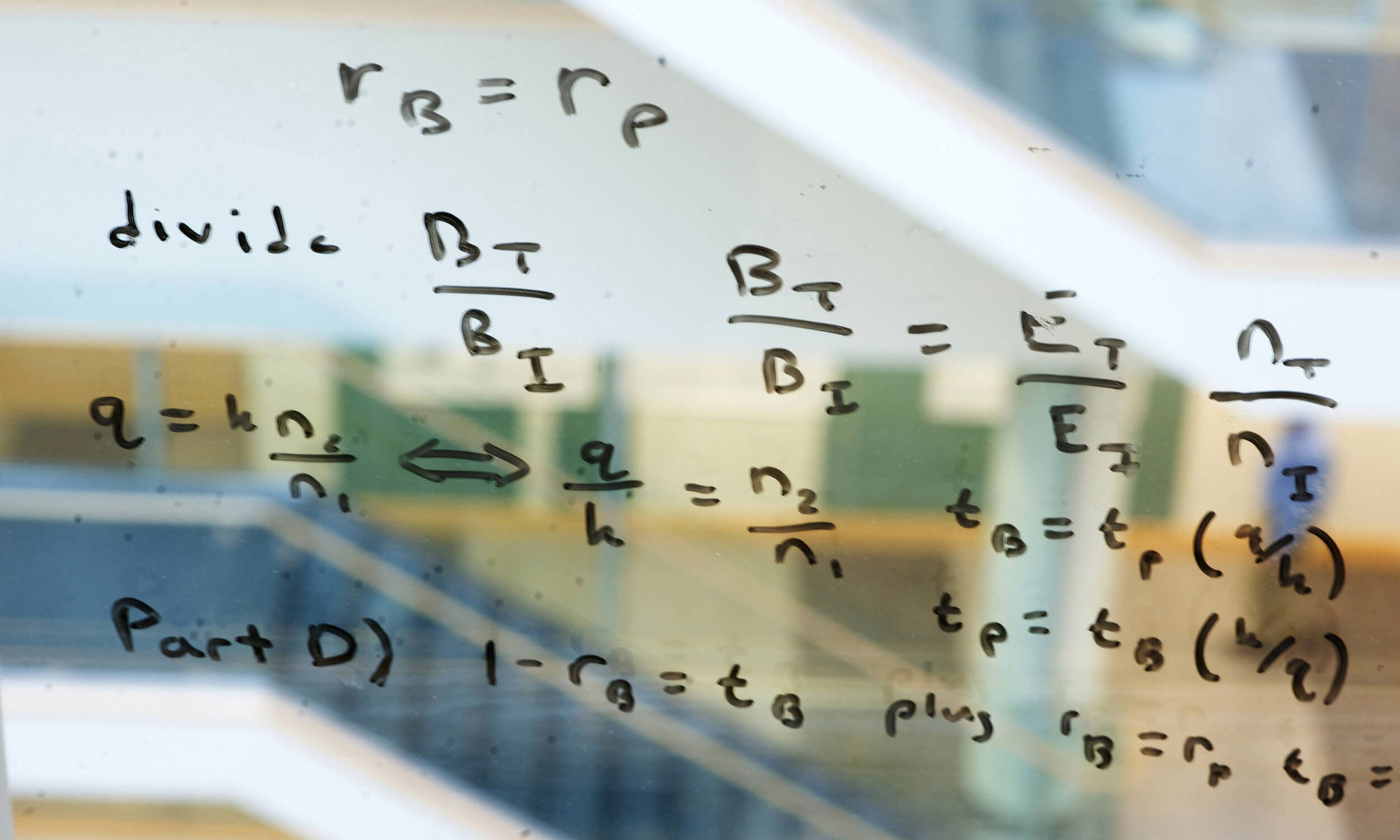

Jake Arkin, PhD student in electrical and computer engineering, demonstrates a natural language model for training a robot to complete a particular task.

A robotic hand with brains

Gripping a pencil is not the same as picking up a baseball, especially for robots, which lack the versatility and adaptability of the human body. So Michelle Esponda ’17 (MS), who completed her master’s thesis in biomedical engineering in the Howard lab, created a prosthetic robotic hand that uses machine learning to identify the proper grasp.

You can help

To learn how you can support robotics research, contact Eric Brandt ’83, Executive Director of Advancement for the Hajim School, at (585) 273-5901.

—Margaret Bogumil, February 2018 (edited from original stories and video by Lindsay Valich and Matt Mann)