Giving virtual reality a ‘visceral’ sound

Most work to date on VR/AR technologies has focused on the 3-D visuals, says Zhiyao Duan, an assistant professor of electrical and computer engineering. University of Rochester researchers and Eastman School of Music musicians are working together to bring the audio experience up to the same quality as the visuals. (Getty Images photo)

Virtual reality (VR) uses advanced display and immersive audio technologies to create an interactive, three-dimensional image or environment. Augmented reality (AR), meanwhile, uses digital technology to overlay video and audio onto the physical world to provide information and embellish our experiences.

At the University of Rochester, we’re crossing disciplines to collaborate on VR/AR innovations that will revolutionize how we learn, discover, heal, and create as we work to make the world ever better.

Imagine what it would be like to sit, not in the audience, but in the midst of an orchestra during a rehearsal or concert. To experience firsthand the sounds of string, brass, woodwind, and percussion sections washing over you from every direction. To see the conductor, not from behind, but pointing the baton directly at you.

Using recital halls as their “labs,” and recording some of the best music students in the world, University of Rochester researchers are creating virtual reality videos of concerts that literally immerse viewers “within” the performance onstage.

“Instead of just watching a movie of the concert, you can put headphones on and walk around among the performers,” says Matthew Brown, a professor of music theory at the University’s Eastman School of Music, and one of the researchers on the project. “The more you get inside the performance, the more visceral and exciting the music becomes. This is very different from the normal video you see on YouTube.”

The quality of the sound, of course, is at least as important as the quality of the images. And that’s where this collaboration hopes to make a major contribution.

“Previous AR/VR research has focused mainly on the visual side,” says Zhiyao Duan, an assistant professor of electrical and computer engineering, who is working on the project with Brown and Ming-Lun Lee, an assistant professor of audio and music engineering. “You see the 3-D, but you don’t really hear the audio change when you move your head or look at different objects. The purpose of this proposal is adding 3-D sound. That’s what’s novel here.”
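The head-tracking effect Duan describes can be illustrated with a minimal sketch. It assumes the audio is stored as first-order Ambisonics (B-format: an omnidirectional W channel plus X, Y, and Z directional channels), one common way to represent a spherical-microphone recording; the article does not say which format or rotation method the Rochester team uses. The function names `encode_first_order`, `rotate_yaw`, and `source_azimuth` are hypothetical. When the listener turns their head by a yaw angle, the renderer counter-rotates the sound field so that the performers stay fixed in the room instead of turning with the listener.

```python
import math
from typing import Tuple

# Hypothetical first-order Ambisonics frame: (W, X, Y, Z).
# Assumed convention: X points forward, Y to the listener's left, Z up,
# with SN3D-style gains (W weight 1). Angles are in radians, measured
# counterclockwise as seen from above.
BFormat = Tuple[float, float, float, float]


def encode_first_order(sample: float, azimuth: float, elevation: float = 0.0) -> BFormat:
    """Place a mono sample at a given direction in the sound field."""
    return (
        sample,                                            # W: omnidirectional
        sample * math.cos(azimuth) * math.cos(elevation),  # X: front/back
        sample * math.sin(azimuth) * math.cos(elevation),  # Y: left/right
        sample * math.sin(elevation),                      # Z: up/down
    )


def rotate_yaw(frame: BFormat, head_yaw: float) -> BFormat:
    """Counter-rotate the sound field when the listener turns their head.

    If the head turns left by head_yaw, every source appears head_yaw
    further to the right, so its relative azimuth becomes
    (azimuth - head_yaw). W (omni) and Z (vertical) are unaffected by a
    rotation about the vertical axis.
    """
    w, x, y, z = frame
    c, s = math.cos(head_yaw), math.sin(head_yaw)
    return (w, c * x + s * y, -s * x + c * y, z)


def source_azimuth(frame: BFormat) -> float:
    """Estimate the horizontal direction of a single encoded source."""
    _, x, y, _ = frame
    return math.atan2(y, x)


if __name__ == "__main__":
    # A violin 90 degrees to the listener's left...
    violin = encode_first_order(1.0, math.radians(90))
    # ...should end up straight ahead once the listener turns 90 degrees left.
    heard = rotate_yaw(violin, math.radians(90))
    print(f"{math.degrees(source_azimuth(heard)):.1f} degrees")
```

Because a yaw rotation only mixes the X and Y channels, it is cheap enough to apply to every audio buffer as head-tracking data arrives. Higher-order Ambisonics, which spherical arrays with many capsules can support, needs larger rotation matrices but follows the same idea.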

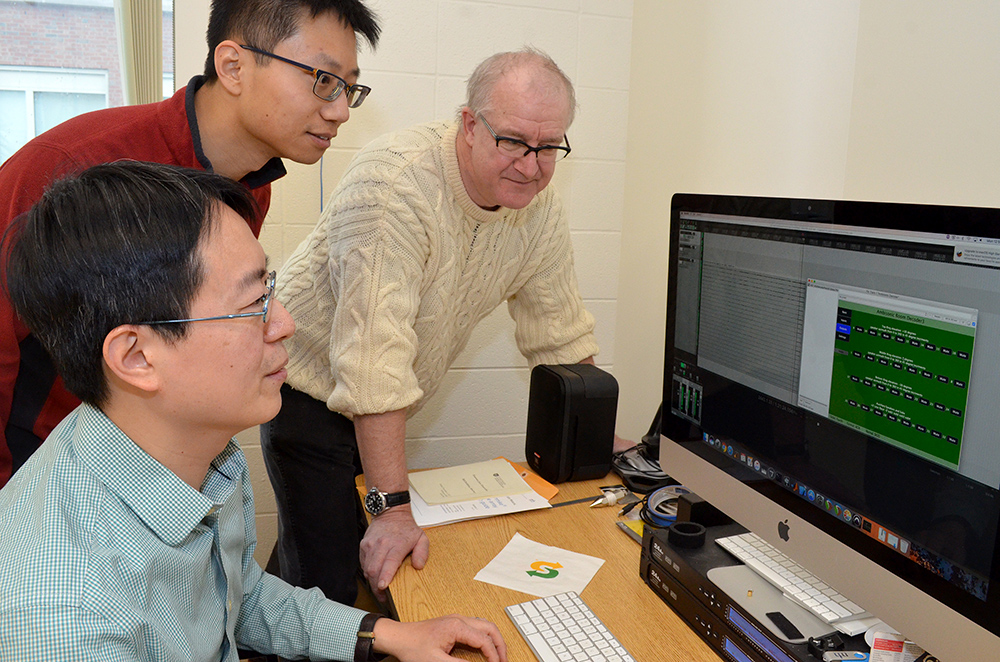

From left, Ming-Lun Lee, Zhiyao Duan, and Matthew Brown look at sound level readouts from a microphone in a sound studio they’ve set up in the Computer Studies Building. (University of Rochester photo / Bob Marcotte)

Duan specializes in designing intelligent algorithms and systems that can understand sounds, including music, with applications such as the audio-visual analysis of music performances. Meanwhile, Lee, who has degrees in both electrical engineering and musicology, is an expert in audio software programming and in sound recording techniques and technology. He is also an active choral director and baritone.

“That’s the strength of this project, because in addition to having access to Eastman and its students, we’ve got the expertise in electrical and computer engineering, and audio and music engineering,” says Brown. Also part of the team is Christopher Winders, who has a PhD in composition from Eastman and helped Brown pioneer TableTopOpera, a music ensemble that produces innovative multimedia performances, including “comic book operas.”

With funding from the University’s AR/VR Initiative, the team has obtained cutting-edge recording equipment, including an Eigenmike 32-channel spherical microphone array. This past semester, they made 11 recordings of Eastman ensembles, both in live concerts and in rehearsals, where there is greater freedom to experiment.

Much of the effort so far has focused on perfecting what Lee refers to as the “workflow.” For example, “Where do we want to place a microphone? At the best seat in the concert hall? Or do you want to put it over the conductor’s head?” asks Lee. The answer may vary depending on the acoustics of the particular hall, and the size and type of ensemble.

Eventually, Duan will develop algorithms to integrate the audio and visual files as effectively as possible.

The researchers envision a host of possible applications, from enhanced listener enjoyment to feedback for performers, live streaming of University concerts, and music therapy for patients.

“We read stories that classical music is losing its relevance,” Brown says. “This is a way to make the experience of classical music more exciting than just sitting and watching a bunch of people in penguin suits performing. The idea that you could interact with the environment, and move around in it, strikes me as being a really powerful way for us to make the music more relevant to younger audiences.”

Bob Marcotte, February 2018 (originally published in Newscenter)