The mysteries of music—and the key of data

There’s much that’s mysterious about music.

“We don’t really have a good understanding of why people like music at all,” says David Temperley, professor of music theory at the University of Rochester’s Eastman School of Music. “It doesn’t serve any obvious evolutionary purpose, and we don’t understand why people like one song more than another or why some people like one song and other people don’t. I don’t think we’re anywhere near uncovering all of the mysteries of music but there are a lot of questions that people are starting to answer with data science.”

Unlocking big data

Researchers at the University are at the cutting edge of this intersection of data science and music, developing databases to study music history and perfecting ways in which computers can automatically identify a genre or singer, model aspects of music cognition and extract the emotional content of a song, predict musical tastes, and offer tools to improve musical performance and notation.

As Temperley says, “There is a lot you can quantify about music.”

Mimicking Human Music Recognition

In 2014 the family of the late Marvin Gaye filed a suit against Robin Thicke, alleging Thicke’s 2013 pop song “Blurred Lines” infringed on Gaye’s 1977 “Got to Give It Up.” Analysts compared sheet music and studio arrangements to assess similarities and differences between the songs, ultimately awarding judgment in favor of Gaye’s children.

What if there was an accurate way for computers to identify these comparisons between vocal performance and performance styles?

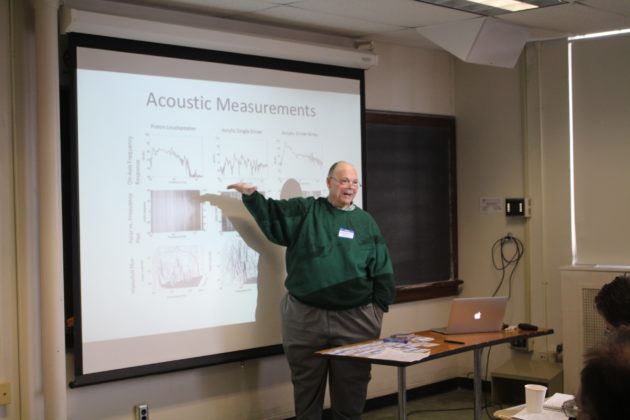

Mark Bocko, professor and chair of the department of electrical and computer engineering, is working toward that goal. He brings his combined love of music and science to the study of subjects ranging from audio and acoustics, to musical sound representation and data analytics applied to music.

Bocko and his group have been using computers to analyze digitally recorded music files, with the goal of better understanding and mimicking the ways in which humans are able to recognize specific singers and musical performance styles. The project has applications not only in settling copyright disputes, but also in training musicians, studying trends in the development of musical styles, and improving music recommendation systems.

Mark Bocko gives a presentation during a workshop for the North East Music Informatics Special Interest Group (NEMISIG). The University hosted the workshop in February, which is an annual event—with a rotating schedule of host universities—that brings together researchers who work on music information retrieval.

“When people listen to recorded music, they can recognize their favorite performers quickly,” Bocko says. “Human listeners can also listen to recordings and quickly make high-level judgments such as ‘Michael Bublé sounds a lot like Frank Sinatra.’ We’re studying what it is that people identify in the musical sound that might lead them to identify similarities in the performance styles of different musicians or to identify specific singers.”

Toward that end, Bocko and his team use audio processing algorithms developed in his and other research labs around the world. An MP3 file of a song is good for reproducing sound for listeners, but this format does not allow researchers to easily identify properties such as pitch modulation, loudness contours, or tempo variations. Using a variety of audio signal processing algorithms, computers can extract such information from sound recordings.

Further analysis of the data enables researchers to detect subtle structures. For instance, the computer can extract the pitch of every note in a song to show where, and in what ways, the singer took liberties. For example, if the frequency of a note in the written music is 220 Hertz, a singer might modulate the frequency in a technique called vibrato, which is intended to add warmth to a note. A singer might also drag slightly behind the tempo of the instruments, giving the song a more relaxed feel.

(credits: iStock and freeimages.com)

“If you add together all of those little details, that defines the style of a performer and that’s what makes it music,” Bocko says. “The detailed structure in the very subtle changes, such as in timing and loudness, can really change the feel of a piece.”

Using data analysis tools from genomic signal processing, similar to that which is used to study sequences in DNA, Bocko and his team search musical data for recurrent patterns—called motifs—in the subtle inflections of various performers and performance styles.

“It’s quite similar to DNA sequencing,” Bocko says. “You dig through all of this data looking for patterns that repeat throughout a performance.”

Bocko and his team coded motifs, and stored them in motif banks, for a number of performances. They then created computer programs to compare motif banks. In this way, they could demonstrate that Michael Bublé really does have a singing style similar to Frank Sinatra’s, but less similar to Nat King Cole’s.

This approach may ultimately enable computers to learn to recognize the subtle nuances between singers and musical performances that human beings are able to pick up simply by listening to the music.

And, it may offer quantifiable evidence of the similarities between “Blurred Lines” and “Got to Give It Up.”

Transcribing Music Automatically

Imagine you are a pianist and you hear something you would like to play—such as an improvised blues solo or a song on YouTube—for which there’s no score. Then imagine that instead of having to listen to the piece over and over again and transcribe it yourself, a computer would do it for you with an impressive degree of accuracy.

Zhiyao Duan, assistant professor of electrical and computer engineering, together with PhD student Andrea Cogliati, has been working with Temperley to extract data from songs and use that data to produce automatic music transcriptions—in effect, feeding audio into a computer and allowing the computer to generate the music score.

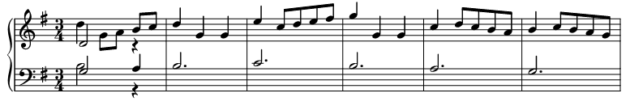

Human notation of a Bach Minuet

A computer notation of the same Bach Minuet utilizing Zhiyao Duan’s automatic music transcription

Most commercial programs are only able to convert MIDI (Musical Instrument Digital Interface) performances, recorded via a computer keyboard or other electronic device, into music notation. MIDI files do not represent musical sound, but are data files that provide information—such as the pitch of a note over time—that tells an electronic device how to generate a sound. Recent methods developed in the research community are able to convert audio performances into MIDI, yet the level of accuracy isn’t sufficient for the MIDI to be further converted into music notation.

Duan’s program records a performance and transcribes it all the way from instrumental audio to MIDI file to music notation with a great degree of accuracy. Upon comparing his methods to existing software programs, in a blind test in which music theory students evaluated the accuracy of the transcripts, “Our method significantly outperformed the other existing software in the pitch notation, the rhythm notation, and the placement of the notes,” Duan says.

Duan’s ultimate goal is to offer this software for commercial use, where it can help users to spot errors in a performance, search for pieces that have similar melodies or chord progressions, analyze an improvised solo, or notate it for repeated playing.

Duan and his team prerecord each note of a piano to act as a template for the computer—in essence, teaching the computer the various notes. Each prerecorded note is known as an atom. The computer code reconstructs a performance by identifying the notes the performer played and putting together the corresponding atoms in the correct sequence to create a musical notation transcript.

Duan uses signal processing and machine learning to help the computer identify the pitch and duration of each note and translate it into music notation. There’s one pitfall to his algorithm, however. The same piano note can be notated in more than one way; the black key between a G and an A on a keyboard, for example, can be called either a G sharp or an A flat. In order to generate an accurate transcription and determine the note’s proper notation, the computer must also be programmed to identify the proper rhythm, key, and time signature.

That’s where Temperley and his students at the Eastman School come in.

“We’re working on the idea of using musical knowledge to help with transcription,” Temperley says. “If you know something about music, then you know what patterns are likely to occur; and then you can do more accurate transcription.”

A recording of a human being playing a Chopin piece vs. an acoustic rendering of the computer’s transcription of the performance.

Rock Song and Wikipedia Corpus

While Duan and Bocko work on the engineering side, designing algorithms to extract information from music, researchers at the Eastman School often provide ground-truth data that informs those algorithms.

“Most machine learning tools have to be trained in some way on some direct data and then they can use that to analyze new data,” Temperley says. “We’re trying to develop a dataset of correct data that a machine learning system can then be trained on.”

Temperley and his team at the Eastman School are creating a database of rock songs and analyzing the harmonies and melodies by hand. This can be useful for tasks such as classifying songs by genre, training systems to extract melodies, or using “query by humming” databases, where users can hum a tune into a computer that then finds the song.

Darren Mueller, assistant professor of musicology at the Eastman School, is creating a corpus of information based on a large-scale data analysis of Wikipedia’s coverage of various musical performers and genres.

Did more people start posting on Beyonce’s Wikipedia page after her 2013 Superbowl appearance? Why is there more information on Wikipedia about an obscure jazz record than there is about an early-career Mozart piece? Do male composers have more Wikipedia entries than their female counterparts?

Using computer algorithms and machine learning, Mueller hopes to analyze how information about music is distributed, who is using Wikipedia, and the types of information being posted. He also hopes to show that Wikipedia can be a valuable source of information, if only as a springboard for defining a concept or identifying the drummer in a particular band.

“I come from the humanities, which tends to value close reading: taking a text or a musical score and looking at the details to see what they might say about larger issues,” Mueller says. “In data science, it’s the opposite. Data science is taking a lot of information and putting it through different algorithms to come up with different trends and patterns.”

Mueller envisions compiling the data in an online tool for music scholars. He also foresees using the data to improve algorithms for extracting similarities between pieces and musicians, such as in the work of Bocko and his group.

“Usually musicians are a little skeptical when anyone is, like, ‘Oh, I want to quantify music,’ because they put their hearts and souls into music,” Mueller says. “It’s their art and there’s always this sort of tension between the arts and science, but there’s no reason these two things can’t work together.”

You can help

To learn how you can support audio and music engineering research, contact Eric Brandt ’83, Executive Director of Advancement for the Hajim School, at (585) 273-5901, or Cathy Hain, Assistant Vice President for Advancement for the Eastman School of Music, at (585) 274-1045.

—Lindsey Valich, March 2017